Dynamic Gaussian Splatting approaches have achieved remarkable performance in 4D scene reconstruction. However, these approaches rely on dense-frame video sequences to achieve photorealistic reconstruction. In real-world scenarios, equipment constraints sometimes mean that only sparse frames are available.

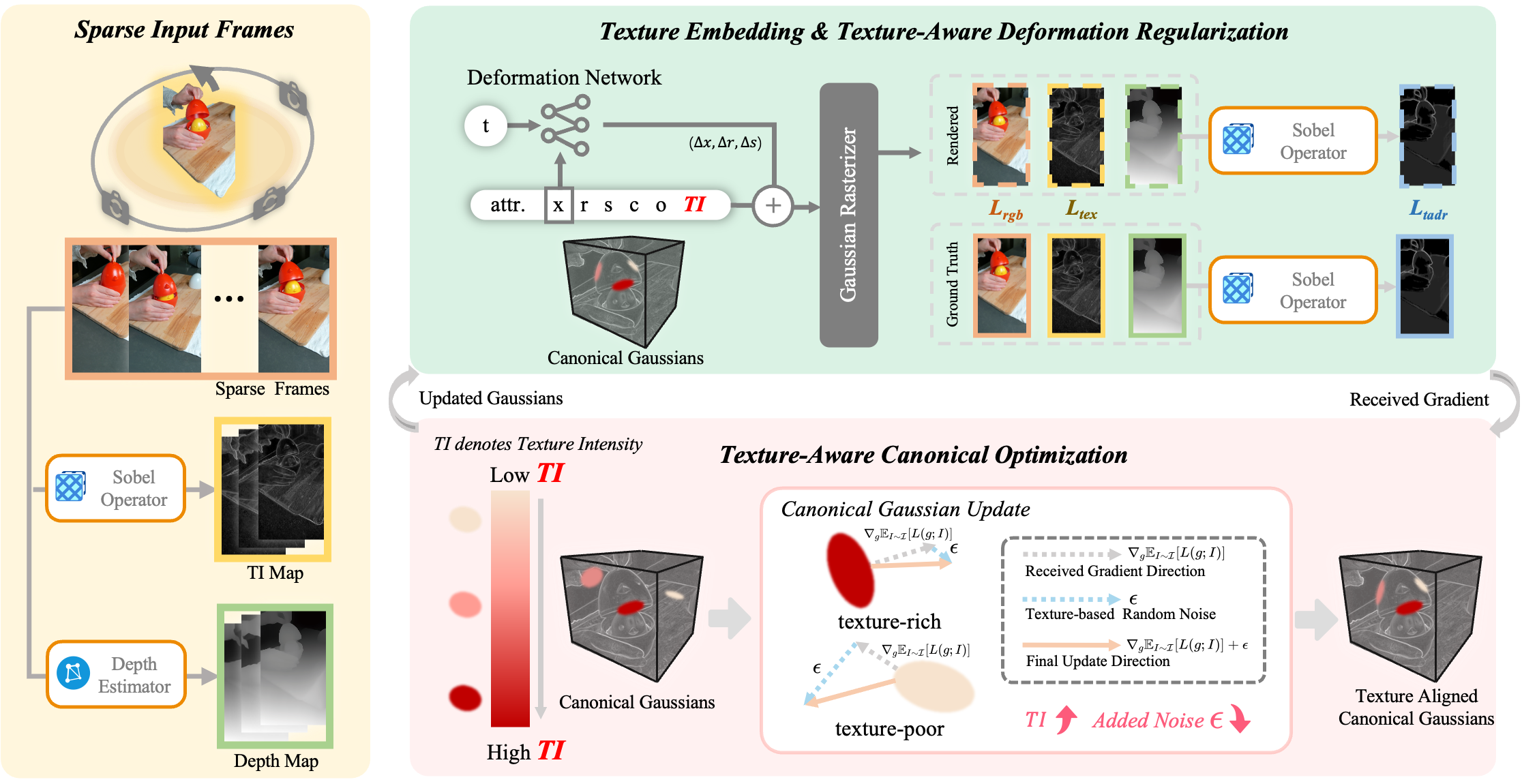

In this paper, we propose Sparse4DGS, the first method for sparse-frame dynamic scene reconstruction. We observe that, under sparse-frame settings, dynamic reconstruction methods fail in both the canonical and the deformed spaces, especially in texture-rich areas. Sparse4DGS tackles this challenge by focusing on these texture-rich areas.

For the deformation network, we propose Texture-Aware Deformation Regularization, which introduces a texture-based depth alignment loss to regulate Gaussian deformation.
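The precise form of the texture-based depth alignment loss is not specified at this level of detail. The following is a minimal, hypothetical sketch of one way such a loss could work. It assumes three things. First, "texture richness" is measured as the normalized image-gradient magnitude of a grayscale frame. Second, rendered depth is aligned to some reference depth map, such as a monocular depth estimate. Third, the alignment term is a texture-weighted mean absolute error. The function names `texture_map` and `texture_depth_loss` and the `base` weight floor are illustrative, not the authors' implementation.

```python
# Hypothetical sketch of a texture-weighted depth alignment loss.
# Images and depth maps are plain nested lists (H x W) to stay dependency-free.

def texture_map(gray):
    """Per-pixel texture score: forward-difference gradient magnitude,
    normalized to [0, 1]. An assumed proxy for texture richness."""
    h, w = len(gray), len(gray[0])
    score = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = gray[y][x + 1] - gray[y][x] if x + 1 < w else 0.0
            gy = gray[y + 1][x] - gray[y][x] if y + 1 < h else 0.0
            score[y][x] = (gx * gx + gy * gy) ** 0.5
    peak = max(max(row) for row in score)
    if peak > 0:
        score = [[s / peak for s in row] for row in score]
    return score


def texture_depth_loss(rendered_depth, reference_depth, gray, base=0.1):
    """Weighted mean absolute depth error in which texture-rich pixels count more.

    `base` is an assumed floor so that flat regions still receive some
    supervision; weights range from `base` (flat) to 1.0 (richest texture).
    """
    weights = [[base + (1.0 - base) * s for s in row] for row in texture_map(gray)]
    num = den = 0.0
    for wrow, rrow, frow in zip(weights, rendered_depth, reference_depth):
        for wt, dr, df in zip(wrow, rrow, frow):
            num += wt * abs(dr - df)
            den += wt
    return num / den if den > 0 else 0.0


if __name__ == "__main__":
    gray = [[0.0, 1.0], [0.0, 1.0]]        # a vertical edge in the left column
    rendered = [[1.0, 2.0], [3.0, 4.0]]
    reference = [[1.5, 0.0], [3.0, 0.0]]
    print(texture_depth_loss(rendered, reference, gray))
```

In this toy example, the left-column pixels sit on the edge and receive weight 1.0, while the flat right column receives only the 0.1 floor. The depth errors at the edge therefore dominate the loss.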

For the canonical Gaussian field, we introduce Texture-Aware Canonical Optimization, which incorporates texture-based noise into the gradient descent process of canonical Gaussians.
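The noise schedule is likewise not specified here. The following is a minimal, hypothetical sketch of texture-scaled noise injected into a plain gradient-descent update of per-Gaussian parameters. It assumes that each canonical Gaussian has a texture score in [0, 1], obtained for example by looking up a texture map at the pixels the Gaussian covers. It also assumes that the noise is zero-mean Gaussian, with a standard deviation proportional to that score. The function `noisy_gd_step` and its `noise_scale` parameter are illustrative names, not part of the described method.

```python
import random


def noisy_gd_step(params, grads, texture_scores, lr=0.01, noise_scale=0.1, rng=None):
    """One hypothetical gradient-descent step on canonical Gaussian parameters.

    params, grads: per-Gaussian lists of floats (e.g. xyz positions and their gradients).
    texture_scores: per-Gaussian texture score in [0, 1] (assumed given).
    The gradient of each Gaussian is perturbed with zero-mean Gaussian noise
    whose standard deviation is noise_scale * texture_score, so Gaussians in
    texture-rich regions are perturbed more and flat regions are left noise-free.
    """
    if rng is None:
        rng = random.Random(0)  # fixed seed for reproducibility in this sketch
    updated = []
    for p, g, t in zip(params, grads, texture_scores):
        sigma = noise_scale * t
        updated.append([pi - lr * (gi + rng.gauss(0.0, sigma)) for pi, gi in zip(p, g)])
    return updated


if __name__ == "__main__":
    params = [[1.0, 2.0], [1.0, 2.0]]
    grads = [[10.0, -10.0], [10.0, -10.0]]
    scores = [0.0, 1.0]  # first Gaussian in a flat area, second in a texture-rich one
    print(noisy_gd_step(params, grads, scores, lr=0.1, noise_scale=5.0))
```

Scaling the noise by the texture score is one plausible reading of "texture-based noise". Under this choice, Gaussians in flat regions follow ordinary gradient descent, while those in texture-rich regions, where the abstract reports that sparse-frame optimization fails, are stochastically perturbed and may be pushed out of poor local fits.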

Extensive experiments show that, when taking sparse frames as input, our method outperforms existing dynamic and few-shot techniques on the NeRF-Synthetic, HyperNeRF, NeRF-DS, and our iPhone-4D datasets.